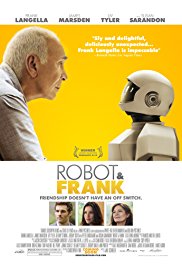

ROBOT AND FRANK

SNIPPET LESSON PLAN: ROBOT ETHICS

Subject: Science/Robots

Ages: 12+; Middle School and High School

Length: Film Clips: Approximately 15 minutes in four film clips.

There is NO AI content on this website. All content on TeachWithMovies.org has been written by human beings.

Using the Snippet in Class:

Students will learn about ethical issues relating to robots, such as responsibility and liability, weaponization, deception, and emotional attachment.

Robots are ubiquitous in movies set in the future. Often they are just part of the futuristic backdrop, and even when they play a role, they are usually not the focus of the issues the film addresses. The implications of artificial intelligence and the possibility that robots will gain autonomy and independence from their creators have sparked countless analogies with the story of the creation and evolution of humankind. A recurring theme is that robots will develop a dignity of their own and begin to have relationships with humans (Blade Runner; A.I.; I, Robot).

Most of these films depict scenarios set in the distant future and therefore in imaginary realities. However, robots are becoming increasingly intelligent, presenting ethical and moral issues that will need to be addressed relatively soon. The movie Robot and Frank provides a unique opportunity to explore these issues, because it is set in the very near future, where events are relatable (such as a whole library switching to electronic publications and keeping just a few “real” books as relics of the past) and life is not very different from the present. This highlights aspects of robot ethics that already deserve closer consideration by students.

Frank has reluctantly accepted the Robot’s presence in his life. As we see in Clip #1, the Robot’s mission is to keep Frank healthy; if it failed, it would be subject to a memory wipe and reprogramming. Frank even starts to like the Robot when he discovers that he can use it as an accomplice in his burglaries. Clip #2 shows how Frank discovers that the Robot would actually be able to assist him. Later in the story, Frank becomes convinced that there is more to the Robot than just hardware. He begins to anthropomorphize the Robot and cannot be dissuaded even by the Robot itself, as we see in Clip #3. Clip #4 shows how attached Frank has become to the Robot and how saddened he is at having to push its “reset” button.

A rebellion of robots against humans and/or the point at which artificial intelligence surpasses human intelligence (the Singularity) will undoubtedly bring important ethical and legal issues to the fore, some of which are already being explored in universities, science-fiction novels, and movies. Although some scholars are seriously studying this field and preparing for the Singularity, it is commonly misperceived as something in the distant future that is hardly relevant to the present. However, most experts estimate that AI will surpass human intelligence within 40 years; some believe it will happen in less than ten years.

Meanwhile, questions about the use and potential abuse of robots are already arising in state-of-the-art robotics and AI laboratories. Committees and boards are being set up in different countries and internationally to start dealing with these issues.

As this is a relatively new field, there are many different approaches. Here we will use the ideas of Alan Winfield, Professor of Electronic Engineering at the University of the West of England and the Bristol Robotics Laboratory.

Prof. Winfield raises four main areas of concern that affect robots already in use today.

One concern is responsibility for a robot’s actions. If a robot causes harm due to a malfunction or a wrong decision taken autonomously, who is to take the blame and bear the consequences, such as legal liability? Is it the owner, the designer, the seller, or all three? What safety and insurance regulations are necessary? In Robot and Frank, the robot decides to help Frank prepare his burglaries and hide the stolen jewels. Should it be treated as an accomplice, or should the manufacturer be liable? In the movie, we also see that the robot would face a memory wipe and reprogramming if it failed to achieve its purpose of keeping Frank healthy. Would that suffice as a “punishment”? Is anyone actually being “punished” at all in this way?

Another concern is, naturally, the weaponization of robots. Letting robots decide whether to attack and kill humans poses a whole range of ethical and legal problems beyond the natural repulsion that any killing produces. For example, if no human actually makes the decision to pull the trigger, killing people will become easier and easier, and no one will feel responsible. Another problem is how robots designed and used to cause harm or to kill can be made to comply with ethics and the law. In a less violent way, this problem is represented in Robot and Frank by Frank’s use of the robot’s lock-picking skill to help him with his burglaries.

A third concern is the deception that takes place when robots look like humans and become indistinguishable from them. Is it ethical to allow such robots to exist and to keep humans in the dark about whom they are talking to, believing, trusting, or even taking orders from? Several movies deal with this kind of deception, such as S1m0ne (2002), The Stepford Wives (2004), and Screamers (1995). In reality, several very human-looking robots already exist, and this raises a whole other set of concerns about the future of robotics and its limits. The robot in Robot and Frank is not deceptive at all, but the issue is hinted at when it unwittingly “deceives” Frank into thinking it is a unique, self-conscious being with an identity. Ironically, it is the robot itself that tries to convince Frank otherwise when Frank refuses to wipe its memory to erase any proof of his own participation in the robbery.

A fourth concern relates to a tendency often seen in movies involving robots: the anthropomorphizing of robots and the emotional bond that follows. In fact, robots often do not even have to look like humans or pets to evoke the feelings that real humans or animals do. Does this make us particularly vulnerable to abuse through robots? Is this bonding dangerous, and is it dangerous in a different way from bonding with animal pets? Pet robots and medical aid robots already accompany humans, in a very limited manner, throughout a great part of their lives. Is the attachment they elicit, usually from vulnerable people, a healthy relationship we should get used to in the future, or something to avoid?

A visit to the websites of robotics research institutions can give a more accurate picture of the state of the art. See, for example,

Bristol Robotics Laboratory

University of Southern California Robotics Research Lab

Note the Assisted Living page from the Bristol Robotics Laboratory which states, “A robot as the interface also has the potential to offer a more social and entertaining interactive experience.” In other words, scientists are working right now on developing the type of robot shown in the movie.

Preparation:

1. Read the Helpful Background section of this Guide.

2. Look for Internet video clips of the most realistic robots available today. For some of the best we had found as of the publication of this lesson, click here.

3. Have students or groups of students research, and be prepared to present to the class, a report on the current state of the art in various areas of robotics, such as the ability to move around in human structures, AI, the ability to hear and interpret language, the ability to see and recognize images, the ability to perform fine motor movements of the hands, the capacity for tactile sensing (feeling touch), and resemblance to humans or other animals.

4. Locate the clips on the DVD, verify the minute and second locations of each clip, and practice moving quickly from one clip to the next.

1. Have the groups of students present to the class the results of their research on progress in the different aspects of robotics (see Preparation step #3, above). Alternatively, ask students for their views on robotics, focusing not only on famous fictional robots but also on what they think the state of the art might be in real life.

2. Now ask the students what controversial issues they think the advancement of robotics may bring about. Make a list on the board. Several of the students’ ideas can probably be related to the four issues described in the Helpful Background section.

3. Show Clip #1 and introduce a brief discussion on the issue of responsibility and liability.

4. Show Clip #2 and introduce a brief discussion on the use of robots as weapons.

5. Show Clip #3 and introduce a brief discussion on deception by human-like robots. You may wish to show the class the most recent available clip of a natural-looking humanoid robot. For some of the best we had found as of the publication of this Lesson Plan, click here.

6. Show Clip #4 and introduce a brief discussion on the anthropomorphizing of robots and the attachment issues.

7. You may optionally give the students an assignment, either individually or in groups, to suggest and/or explain an ethical code for robots that addresses these issues, such as the one proposed by Prof. Alan Winfield in his blog.

1. If students were not given the assignment in Preparation Step #3 before they saw the movie, they will be interested in that assignment now.

2. Students can be asked to research and write an essay or a report to the class (or both) on the following questions:

Who is responsible for the actions of a robot? Address, at a minimum, each of the following questions: If a robot causes harm due to a malfunction or a wrong decision taken autonomously, who is to take the blame and who is to bear the consequences, such as legal liability? Should it be the owner, the designer, the manufacturer, the seller, or all of them? In Robot and Frank, the robot decides to help Frank prepare his robberies and hide the stolen jewels. Should the robot be treated as an accomplice? In the movie, we also see that the robot would face a memory wipe and reprogramming if it failed to achieve its purpose of keeping Frank healthy. Would that suffice as a “punishment”? If that is the consequence, is anyone actually being “punished”? In each of your answers, describe the public policy reasons for each alternative, i.e., its effects on society as a whole.

In the circumstances of Robot and Frank, is anyone other than Frank liable for the Robot’s actions in helping Frank with the burglaries?

What is the difference between a drone and a robot?

In the not-too-distant future, armies will have tanks or gunships that decide which targets to destroy and which fighters to kill. For example, they could be programmed to kill all people acting in a hostile way (carrying guns and refusing to surrender) who do not have a badge given only to friendly troops. Such a machine would be intelligent enough to be better than a human soldier at determining whether a person was an enemy, a combatant, a non-combatant, or a friend. Is this type of robot and its programming defensible as an ethical matter? Who is responsible if this machine makes a mistake and kills the wrong person?

What is The Singularity and when will we reach it?

At some point in the future, companies will be able to make robots that look like humans and become indistinguishable from them. Is it ethical to allow such robots to exist and to keep humans in the dark about whom they are talking to, believing, trusting, or even taking orders from?

How should we deal with the problem that people tend to anthropomorphize robots and to form emotional bonds with them? In fact, robots often do not even have to look like humans or pets to evoke feelings of friendship or caring. Pet robots and medical aid robots already accompany humans, in a very limited manner, throughout a great part of their lives. Does this make humans particularly vulnerable to abuse through robots? Is this bonding dangerous, and is it dangerous in a different way from bonding with animal pets? Is the attachment that robots can elicit, usually from vulnerable people as in the movie Robot and Frank, a healthy relationship we should get used to in the future, or something to avoid?

When a robot can think like a human being and have emotions, should that robot be given the rights of a human being? How will the tendency of people to anthropomorphize robots, as Frank did with the robot in the movie, affect your answer to this question?

Professor Alan Winfield’s blog includes a proposal for robot ethics that addresses the concerns described in this lesson plan.

Educational resources on robotics:

Robotics Lessons – 1st – 8th Grades from the USC K-12 STEM Education materials (and links in their extensive section “Other Educational Robotics Sites”)

Robots for Kids from Science Kids

This Snippet Lesson Plan was written by Erik Stengler, Ph.D., and James Frieden. It was last revised on October 11, 2013.